Dr. Meritxell Huch’s research into tissue regeneration and carcinogenesis, and its application in the expansion of tissues outside the body, is a significant advancement in developmental biology and opens the possibility of truly personalised medicine. Organoids are created by cultivating adult stem cells and growing microscopic, functioning 3D models representative of cellular differentiation and collaborative function. This technology is currently being used to test the efficacy of drugs for the treatment of congenital and acquired diseases and, potentially in the future, as a grafting technique for the repair of dysfunctional organs.

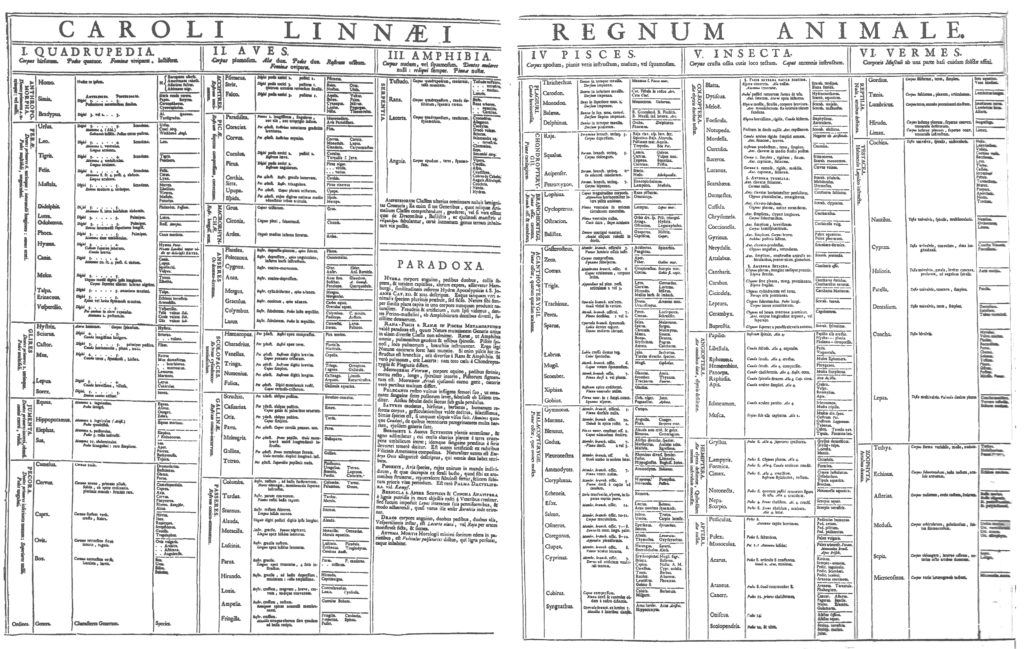

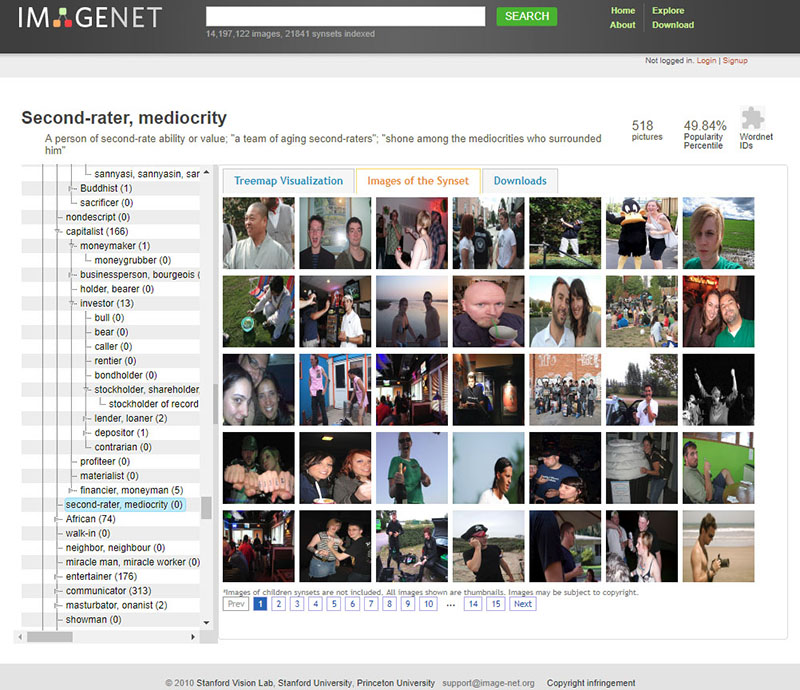

Language being a technology in and of itself, Dr. Huch’s use of figurative speech disrupts standard conceptions of taxonomy and gains agency as a determinant of practical dissemination. As late capital and globalisation have ostensibly undergone reification from analogical virus to reality, ex vivo tissue development conceives Deleuze and Guattari’s concept of “BWO’s” as metaphor to flesh. The description proposes a touchable condition, a surreal horizon of shifting bodies and migratory organs.

AT

What is your specific study and application in the field of tissue regeneration and carcinogenesis?

MH

In a nutshell, it is how tissues develop, how they are maintained during adulthood and how our tissues repair – how they regenerate, basically. We try to understand that at a mechanistic level, and to do so we use the liver as a model organ because of its huge regenerative capacity.

You can cut away up to 70% of the liver and it will regrow from the remaining tissue, very much like when you amputate the limb of a salamander and it regrows; the liver is the only organ that can do it proficiently in increments.

We try to understand the mechanism: how the cells know that they have been damaged, how they know they have to react and when their regeneration is terminated. There are other tissues that regenerate, but not like the liver. For the stomach or the intestine, that is what they are constantly doing: automatic proliferation. But you cannot amputate a part and expect the remainder to grow. The liver, by contrast, does not proliferate like that except when it is damaged.

These questions have fascinated scientists for centuries, and model organisms have helped us gain further understanding of the process. However, we still do not know how humans regenerate their livers. As you can imagine, we cannot study that regeneration in humans because we cannot do damage to people and check what happens. Because of that, we opted to first establish a system ex vivo, which recapitulates what happens in the organs in vivo, in the person, and now, with the models in place, we are in a better position to ask that question. Yet we must remember that organoids are not the organ, nor the organism per se, so we still have to improve them to be able to get a more holistic understanding of the problem.

AT

What’s the general size of an organoid? When does an organoid classify as an organ? Beyond self organisation and cell determination?

MH

One definition is a three-dimensional structure of cells that have the capacity to organise by themselves and to generate the structure and function of the organ they are mimicking in many aspects, but not all.

AT

What aspects would it not recapitulate?

MH

It will not recapitulate, for instance, vascularization. You cannot recapitulate inter-organ interactions.

Because you only have one tissue, sometimes it cannot recapitulate the whole function. Sometimes it’s more like a premature, fetal-like organ; sometimes it’s more like an adult organ but it’s missing some function of the tissue, in the case of the liver, for instance… So, it’s not a complete organ, but the cells that compose that organ.

Love is Not Enough, Video, Alex Thake, 2020.

AT

How does the lab determine when growth begins and ends? Could you elaborate on what the growth culture consists of and how organoids are preserved and regulated? How does the lab determine spatial constraints?

MH

We understand growth as cellular division: when we can see that one cell becomes more than one, going from one to two, two to four, four to eight.

In culture, the organoids depend on the tissue. For instance, the stem cells in our intestine divide every 24 hours.

In the case of the liver, the cells hardly divide; it may take three weeks, even three months, for a single cellular division. But in culture they divide much faster, because it recapitulates regeneration more than a homeostatic state, more than the natural state. As for the question of how it is being regulated: the scientific community has gained some knowledge of the mechanism, but we are far from a full understanding of it. This is a question that we still don’t know much about, but we are working on it.

In other words, one of the things we know is that it’s kind of activating the regenerative program.

That’s why understanding regeneration mechanisms can help us understand how organoids grow, but also how the tissue grows, and understanding how the tissue grows can allow us to understand how it is regulated and also how organoids regulate growth. It’s a kind of feedback loop.

AT

What are the specifics of controlling cell fate determination, and ability of control?

MH

This is a very important question that we do not have answers to. I mean, there are no answers to how you control cell fates.

So we know genetics is very important. But probably the cellular environment is equally important.

These are indeed very important questions that we, as scientists, are asking ourselves as well and trying to find answers to. But we don’t have them yet.

AT

So that’s the whole process in itself, that’s what you’re trying to determine?

MH

Indeed. So, a cell state or a cell fate is determined; the cell is born with it. But what we start to see is that cell fates are plastic. There is a kind of predetermination, but this predetermination or likelihood is not fixed.

There are fates that are fixed. A neuron can hardly become any other thing; neurons are pretty fixed.

There are other things that are more plastic; we call that concept cellular plasticity.

And this concept, this ability to become something different, means not having a fixed fate but the capacity to convert to other fates within the same tissue.

AT

That said, can an organoid be rerouted to become another organ?

MH

I like this question. Well, I would rephrase it slightly differently: can you make one cell become something completely different? Going back to the example of the liver, think that a hepatocyte makes a hepatocyte. A ductal cell makes a ductal cell, and in some instances, an adult ductal cell can become a hepatocyte and vice versa… However, what can never happen is that an adult ductal cell becomes an insulin-producing pancreas cell.

This is crossing. Okay, this is crossing a moment during development that has passed. We start from the zygote, from one single cell. This single cell makes many cells during embryonic development.

And as the tissues are specified, becoming brain, becoming liver, becoming pancreas, some genes are shut off forever and some might be opened to allow that cellular fate.

That means that a human pancreas cell cannot become an intestinal cell, or a neuron. It seems that the way our development happens puts a block on the expression pattern in the genome, which is never going to go back to that past state. Yet in the liver, within the tissue, if you need to go from one fate to the other, you still can, but of course, depending on the conditions.

AT

That’s due to proximity or environment?

MH

It has to do with the way that the genome organizes, in a sense; we still don’t understand it on the molecular level, basically. This is a matter of huge investigation at the moment: how these fates are being closed during development.

Imagine it like this: you have five doors and you’re going to take door number three, which is going to make you become a hepatocyte.

Once you “cross” that door number three, you cannot go back; the door closes forever. So at a certain moment, there are no doors anymore and you arrive at your final fate. Yet, depending on the tissue, there is some kind of plasticity, let’s call it flexibility, and you can become another cell type within the same tissue. But you cannot go back to something completely different, from another tissue, unless you genetically manipulate the cell.

To genetically manipulate you have to induce expression of genes that were not there, by introducing the genes. This is how Yamanaka made the fibroblasts, which can become pluripotent now. The fibroblasts can now become a zygote type of cell. Why? Because he found factors that are essential for that. For that state of pluripotency, for that state of becoming anything. He found the key to go back, unlock all these doors and return to the original state. But, you have to genetically manipulate it, it’s not natural.

AT

Could you say that an organoid produced from a particular subject is directly representational of the organ at time of collection or would an organoid need the “memory” of developmental function in coordination with other systems (vascular for instance) to be determined a replica?

MH

Ah, that’s a very interesting point. If we take it directly from a patient, I mean directly from a particular subject, we know that it kind of retains many, many, many features, from transcriptional to kind of epigenetic memory of what it was. So, somehow it does indeed represent the organ at collection time. Whether the organ in vivo and the organoid ex vivo would evolve in the same way, we do not know.

The coordination aspect is interesting. We actually do not know whether coordination with other systems is required to maintain this original status of the patient; basically, we don’t really know because we cannot put other systems in there yet. But it is a future avenue some of us are indeed exploring.

On the other hand, if instead of making organoids directly from the tissue we use cells from this patient and we induce pluripotency, like what Yamanaka did, then we induce full reprogramming, and for this reprogramming to a pluripotent state you need to lose this memory, or at least a great part of it.

AT

How is interorgan communication established between organoids? What is the most comprehensive inter-organoid system created thus far?

MH

This is not yet there, so it has not happened yet. The most comprehensive “inter-organoid system” –

It’s from a group at Cincinnati Children’s Hospital, where they showed that they can recapitulate the liver, pancreas and biliary duct structure – from development, from the original organ primordium.

But they can recapitulate the development from the progenitor that generates these three organs to the generation of the three primordium structures.

Not the organ itself, but the primordium structures. It is a fantastic achievement indeed.

Recapitulating from adult tissue has not been attempted yet.

AT

So in terms of personalised medicine and developing therapies would you be able to apply the research from a single subject to a body of people with similar genetic disposition? Or would this technology be distributed on an exclusive basis? Could there be hypothetical libraries of organoid information?

Arcosanti Arch, phosphorescent pigment, binder, UV charge, Alex Thake, 2020, Image: Ainsley Johnston.

MH

Let’s say personalised medicine goes in that direction. So, the long-term vision of it is that you could have a tissue that is diseased, correct it ex vivo and put it back, and it will be from the same patient but corrected, so there will be no rejection because it would be from the same person. However, we are not there yet.

You can also think that there is a great shortage of donors in general, even for the liver. And now we can expand pancreas tissue and liver tissue ex vivo, so we could actually generate a source of human liver and human pancreas tissue that we could put back into a patient instead of needing a full organ transplant.

We would infuse cells that are functional, with the aim of the cells being grafted into the tissue and becoming functional parts of the tissue. In the liver, using mouse models, we demonstrated that this is possible with mouse liver organoids. You can take liver from one mouse, grow it in culture and transfer it to 20 mice. And we saw that the cells got into the liver and became functional cells. Human into human is going to be a long road.

Another thing that we’ve also done, also going in the direction of personalised medicine: imagine one of these patients is diagnosed with liver cancer. We can take a biopsy of the cancer and expand it in culture as if it were in the patient.

Now, you can imagine you have a patient who has a tumour: you can propagate each tumour ex vivo, enough to generate material on which you can now test as many drugs as you want, and these are drugs specific for this particular patient.

So this is what is true personalised medicine.

So you could have the disease itself in culture, ex vivo. Now test ten drugs, twenty drugs, thirty drugs and say, drugs A, B and F are the good ones that will work for that patient and the others don’t work. But they might work for a second patient, for a third patient.

That’s why personalised medicine could not be done before, because we could not grow cells directly from the patient without manipulating them first. Now, that’s history.

We can grow tumour cells from the patient directly, without manipulating these cells, and these tumour cells recapitulate many aspects of the tumours that the patient has inside the body, which means that it’s a better model to predict what would be the best treatment for our patient.

AT

Are these therapies competing or in congruence with CRISPR gene editing technologies?

MH

There isn’t a parallel but they can be complementary. So, CRISPR editing just manipulates the genome.

Imagine a page of a book, and you are missing a critical word and anything can be that word. So imagine you have a genome, it has a defect. Gene editing can correct that defect.

Okay, this is what you do in the genome, but then the important question is: which cell are you going to edit? In the book example, if you put the word on the wrong page, it still has no comprehensible meaning, right?

Now, go back to the example of liver disease: in a patient that has a liver disease, the disease is in a subset of the cell population of the liver, not in the whole liver. Then, we need to correct the right cell. So, the organoids give the cell types.

Gene editing is a tool to correct any defect in the genome, in whatever cell.

Organoids give you the specific cell where you are going to do the correction in a meaningful manner.

AT

Could you comment on contemporary applications of ex vivo expansion in regards to COVID-19?

MH

It is an application of the technology: because we can now grow human tissue in a dish, we can ask the question, does this pathogen, in this case the COVID-19 virus, affect a particular tissue?

Early on in the outbreak we learned that some patients had liver failure; the livers of these patients were collapsing. The reason for that was unknown: it could be either that the liver failure of these patients was caused by a secondary reaction to the inflammation everywhere in the body, or that it was caused by the virus entering the blood and targeting the liver. However, you cannot ask that question in the patient, since you cannot monitor where the virus has entered, nor can you take biopsies (especially from these sick patients) just for the sake of knowing whether the cells have viral particles. Here is where organoids, being an ex vivo organ-like culture, could help.

Since we knew that our organoid culture system expresses the receptor for the virus, we collaborated with a group at Heidelberg who had patients that had the virus. Because we had human liver organoids, derived from healthy human donors, in a bank in my lab, we sent [the organoids] to Heidelberg for them to infect our cultures and answer the question of whether the liver cells are actually targeted by the virus.

So there could be two options: either the virus is directly entering the liver cells and killing them, or the virus is killing many cells, which causes the liver to be very unhappy because it has to detoxify so much that it also results in liver failure.

So we can confirm, yes – the liver cells are targeted directly by the virus.

Detail, newspaper, silicon tube, ants, Picnic at Hanging Rock, Alex Thake, 2020, Image: Ainsley Johnston.

AT

Do you have a timeline for when you anticipate predictive and transplanted organoid technologies to be widely implemented?

MH

Yeah, I mean, we have to think that all of this is relatively new in terms of human publication. It was only five years ago that we managed to grow human tissue ex vivo. So it’s actually a field that is really expanding at the moment. For instance, for cancer, it’s already been used to do predictive medicine and is starting to be used as a tool to tell which drug would be better for that particular cancer patient. It’s really early stages because it has been scaled only as research. Now it’s starting to be embraced a bit by pharmaceutical companies, but to reach the general public and make an impact it needs to be implemented not from little research labs like ours but from the government level.

I see it as a future in the next five to ten years.

If we can do that and it proves to have good predictive value, maybe it will be implemented widely.

The transplant experiments we were talking about at the beginning, like the capacity of expanding human cells and then putting them back into patients – I think that is more the long-term future, because there is still quite some improvement that needs to be done on the systems, and this improvement can only come from basic research.

The industry implements these things when they are past the development stage and there is little development left to be done.

For transplantation, cell therapy transplantation, I still envision maybe 15 years more. But who knows: 15 years ago, nobody (or very few) knew the word “organoid” or used it as we do now… Even we, in our first manuscripts 10 years ago, were calling them “3D Adult stem cell cultures”. So the growth of this field, and probably of all fields, is exponential, and it only takes one or two breakthroughs to get to the next level. Only time will tell…

Alex Thake received her BFA from Hunter College in New York. She currently resides in Frankfurt attending the Städelschule. Her installation considers and conflates preconceptions of technology, architecture and biology. Her most recent works have been investigating biological radicalism as response to repressive systems and the internal and external manifestations of failed utopias.

Dr Meritxell Huch obtained her PhD at the Center for Genomic Regulation in Barcelona. In 2008, she moved to the Netherlands, to the Hubrecht Institute, where she studied stem cells. She made the ground-breaking discoveries that liver and pancreas cells can be expanded as organoids ex vivo, and that these recapitulate many aspects of tissue regeneration in a dish. In 2019, she was awarded the Lise Meitner excellence research program fellowship from the Max Planck Society and moved her lab to the Max Planck Institute of Molecular Cell Biology and Genetics, in Dresden, where she focuses her research on understanding tissue regeneration, organ formation and their deregulation in disease.